Is a drug effective or not?¶

drug = [54, 73, 53, 70, 73, 68, 52, 65, 65] # treatment group

placebo = [54, 51, 58, 44, 55, 52, 42, 47, 58, 46] # control group

Where:

$ H_0 $ -> The null hypothesis: there is no difference in the drug and placebo groups on average (the drug does not have any effect).

$ H_1 $ -> The alternative hypothesis: there is a significant difference in the drug and placebo groups on average (the drug works).

I will assume Type I Error / a significance level (alpha) at 0.01.

Remarks:

- Type I Error. Reject the null hypothesis when there is in fact no significant effect (false positive). The p-value is optimistically small.

- Type II Error. Not reject the null hypothesis when there is a significant effect (false negative). The p-value is pessimistically large.

# Significance level

alpha = 0.01

Analytical Method¶

import numpy as np

# Means difference of the samples

np.mean(drug) - np.mean(placebo)

Is the means difference statistically significant?

We use T-Student sampling distribution and calculate t-test statistic to find out.

T-test assumptions:

- Both groups (drug and placebo) should be normally distributed

- Samples should come from populations with equal variances

- Samples should be of the same size

- At best there should be more than 20 observations in a sample

Assumption 1¶

Both groups (drug and placebo) should be normally distributed

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

sns.distplot(drug, color="skyblue", label="Drug", kde=False, hist=True, rug="True")

sns.distplot(placebo, color="red", label="Placebo", kde=False, hist=True, rug="True")

plt.legend();

from scipy import stats

def normaltest(sample, name, alpha=0.05):

'''

Shapiro-Wilk test for samples with n < 50 observations.

Reference: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.shapiro.html

'''

W, p = stats.shapiro(sample)

#print("W = {:g}".format(W))

#print("p = {:g}".format(p))

if p < alpha: # null hypothesis: sample comes from a normal distribution

print("{} does not come from a normal distribution".format(name))

else:

print("{} comes from a normal distribution".format(name))

# Is drug normally distributed?

normaltest(drug, "Drug")

# Is placebo normally distributed?

normaltest(placebo, "Placebo")

# To solve the issue of having too small sample size...

# I could use the following: (just joking!)

import warnings

warnings.filterwarnings('ignore')

Assumption 2¶

Samples should come from populations with equal variances

def varsequals(sample1, sample2, alpha=0.01):

'''

Perform Bartlett’s test for equal variances.

Bartlett’s test tests the null hypothesis that all input samples are from populations with equal variances.

Reference: https://docs.scipy.org/doc/scipy-0.14.0/reference/generated/scipy.stats.bartlett.html

'''

T, p = stats.bartlett(sample1, sample2)

#print("T = {:g}".format(T))

#print("p = {:g}".format(p))

if p < alpha: # null hypothesis: all input samples are from populations with equal variances

print("Not all input samples are from populations with equal variances.")

else:

print("All input samples are from populations with equal variances.")

varsequals(drug, placebo)

Calculate t-statistic¶

Are our samples expected to have been drawn from the same population?

# p-value: Probability of obtaining a result equal to or more extreme than was observed in the data.

t_stat, p = stats.ttest_ind(drug, placebo) # all observations are independent

print('t={:.4f}, p={:.4f}'.format(t_stat, p))

# Calculate degrees of freedom

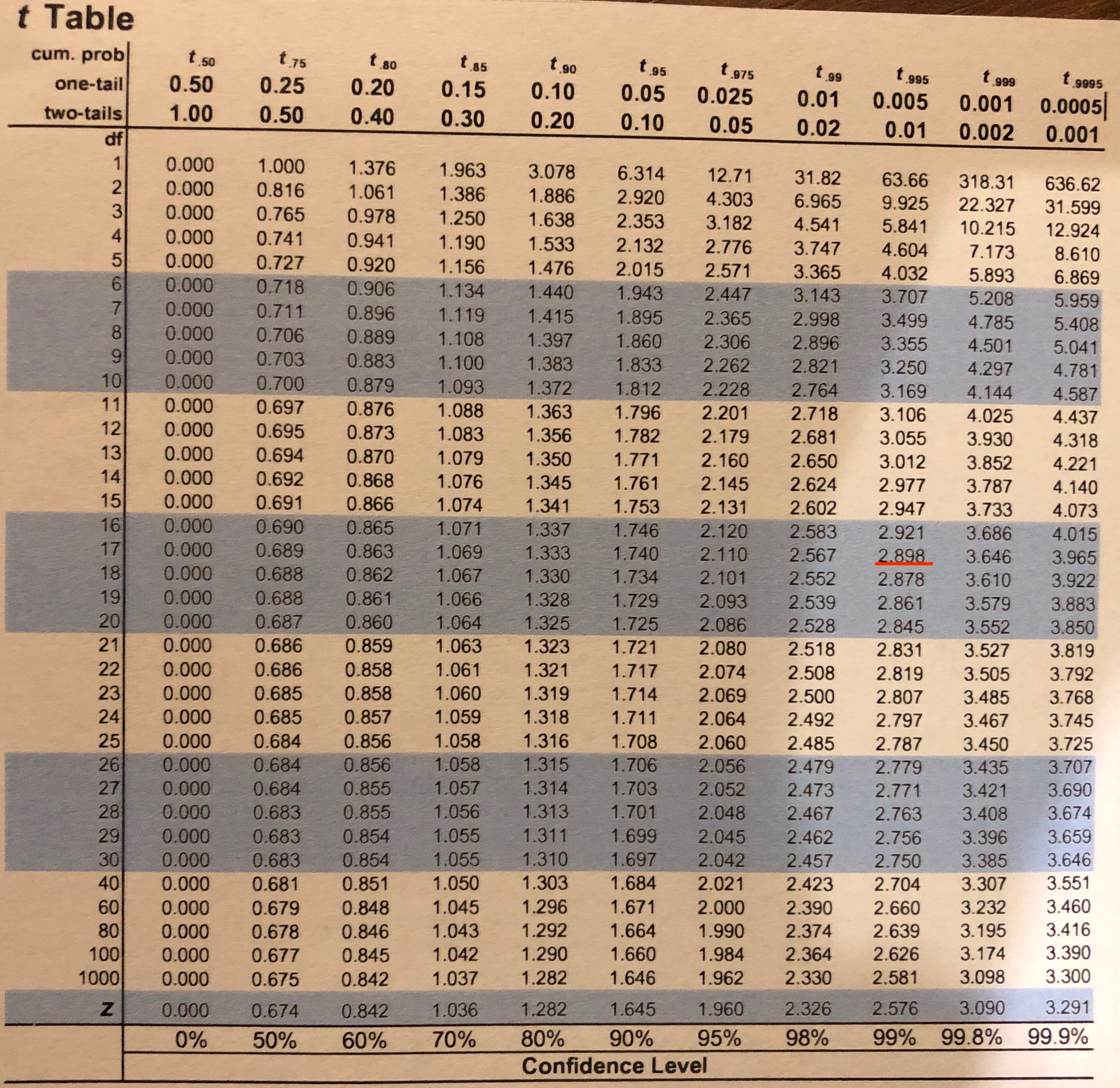

df = len(drug) + len(placebo) - 2

df

alpha

# Calculate the critical value (two-tailed test)

# PPF (percent point function)

cv = stats.t.ppf(1.0 - alpha/2, df)

print(cv)

# Confirm cv with CDF (cumulative distribution function)

#p = stats.t.cdf(cv, df)

#print(p)

# Interpret via critical value (abs for symmetric distribution)

if abs(t_stat) <= cv:

print('Accept null hypothesis that the means are equal.')

else:

print('Reject the null hypothesis that the means are equal.')

# Interpret via p-value

if p < alpha:

print('Reject the null hypothesis that the means are equal.')

else:

print('Accept null hypothesis that the means are equal.')

Hypothesis test result¶

We have sufficient evidence to reject the null hypothesis which means the drug works.

Permutation Testing (Computational Method)¶

# Python's statitics module provides functions

# for calculating mathematical statistics of numeric (real-valued) data

from statistics import mean

from random import shuffle

mean?

observed_diff = mean(drug) - mean(placebo)

print(mean(drug))

print(mean(placebo))

print(f"{observed_diff:.2f}")

# Always visually inspect your data

import matplotlib.pylab as plt

import seaborn as sns # A library for statical plotting

%matplotlib inline

sns.distplot(drug, color="skyblue", label="Drug", kde=False, hist=True, rug="True");

sns.distplot(placebo, color="red", label="Placebo", kde=False, hist=True, rug="True");

plt.legend(); # Seaborn plots are interoperatable with matplotlib

Let's assume there is no difference in means of the treatment and control group. If so, we can merge the observations from both groups and we can check how likely is that the observed_diff value or greater appears if there is no difference in the groups.

Let's check how likely is for our observed_diff value or greater to exist when doing random groups assignment from the common pool many times. If the null hypothesis is true (the drug does not work, on average group means are close to 0) we should observe many occurences of mean differences equal or greater to observed_diff. If not, then we can safely reject the null hypothesis.

drug, placebo

# Unite the data

combined = drug + placebo

combined

shuffle?

shuffle(combined) # Rearrange in-place

combined

# Randomly assign to drug and placebo group

drug_random = combined[:len(drug)]

placebo_random = combined[len(drug):]

print(drug_random)

print(placebo_random)

sns.distplot(drug_random, color="skyblue", label="Drug", kde=False, hist=True, rug="True");

sns.distplot(placebo_random, color="red", label="Placebo", kde=False, hist=True, rug="True");

plt.legend();

# Simulate it a bunch of times

n = 10_000

count = 0

simulated_means = []

for _ in range(n):

shuffle(combined)

shuffled_diff = mean(combined[:len(drug)]) - mean(combined[len(drug):])

simulated_means.append(shuffled_diff)

count += (shuffled_diff >= observed_diff)

print(f"""{n:,} label reshufflings produced only {count} instances

with a difference at least as extreme as the observed difference of {observed_diff:.2f}.""")

# The p-value is a chance of observing the current difference when there is truly no difference.

# Probability of obtaining a result equal to or more extreme than was observed in the data.

p = count / n

p

print("alpha={}, p-value={}".format(alpha,p))

# Interpret via p-value

if p < alpha:

print('Reject the null hypothesis that the means are equal.')

else:

print('Accept null hypothesis that the means are equal.')

# Interpret visually

# Find the quantile for the alpha/2 cutoff (two-tailed test)

cv = np.max(simulated_means) - stats.t.ppf(1.0 - alpha/2, df)

simulated_means = np.asarray(simulated_means)

plt.figure(figsize=(16, 8))

sns.distplot(simulated_means, color="skyblue", kde=True, hist=True, rug="True")

plt.title("Simulated Differences of drug and placebo group means for the null hypothesis", fontsize=12)

plt.xlabel("Probability Means Difference", fontsize=12)

plt.ylabel("Percent", fontsize=12)

plt.axvline(cv, color='g'); # critical value

plt.axvline(observed_diff, color='r'); # observed mean difference

Permutation test result¶

It is very unlikely that the observed_diff value or greater appeared if there is no difference in the groups, so there is a difference between the drug and the placebo groups. We have sufficient evidence to reject the null hypothesis, the drug works.

from scipy.stats import norm

def tstatistic(sample1, sample2, alpha, one_tail):

'''

Calculate t-statistic for 2 samples

Are the two samples expected to have been drawn from the same population?

'''

# Calculate means

mean1, mean2 = np.mean(sample1), np.mean(sample2)

# Calculate sample standard deviations

std1, std2 = np.std(sample1, ddof=1), np.std(sample2, ddof=1)

# Calculate standard errors

n1, n2 = len(sample1), len(sample2)

se1, se2 = std1/np.sqrt(n1), std2/np.sqrt(n2)

# Standard error on the difference between the samples

sed = np.sqrt(se1**2.0 + se2**2.0)

# Calculate degrees of freedom

df = len(sample1) + len(sample2) - 2

# Calculate the t statistic

t_stat = (mean1 - mean2) / sed

# Calculate the critical value

if one_tail:

cv = stats.t.ppf(1.0 - alpha, df)

else:

cv = stats.t.ppf(1.0 - alpha/2, df) # 2-tailed

# Calculate the p-value

p = (1.0 - stats.t.cdf(abs(t_stat), df)) * 2.0

return t_stat, df, cv, p

t, df, cv, p = tstatistic(drug, placebo, alpha, one_tail=False)

t, df, cv, p